Dec. 05, 2024 09:51

Applying Particle Filtering Techniques for Enhanced Data Estimation and Tracking

An Introduction to Particle Filters

Particle filters, also known as Sequential Monte Carlo (SMC) methods, are a class of algorithms for estimating the hidden state of a dynamic system from noisy observations, given a probabilistic model of how the state evolves and how the observations are generated. They are widely used in robotics, computer vision, and finance because they can handle non-linear and non-Gaussian state estimation problems effectively.

How Particle Filters Work

At its core, a particle filter approximates the probability distribution of a system's state with a set of discrete samples, called particles. Each particle represents one possible state of the system and carries a weight that reflects how well it explains the observed data. Each iteration of the filter consists of three steps: prediction, update, and resampling.
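As a minimal illustration of this representation, the sketch below stores a 1-D particle set as two parallel Python lists and computes the state estimate as the weighted mean of the particles. The particle values, the weights, and the helper names `normalize` and `weighted_mean` are hypothetical, chosen only for illustration.

```python
def normalize(weights):
    """Scale non-negative weights so that they sum to 1."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    return [w / total for w in weights]


def weighted_mean(particles, weights):
    """Estimate E[x] from a weighted set of 1-D particles (weights already normalized)."""
    return sum(p * w for p, w in zip(particles, weights))


# Hypothetical particle set: five candidate states with unnormalized weights.
particles = [0.0, 1.0, 2.0, 3.0, 4.0]
raw_weights = [1.0, 2.0, 4.0, 2.0, 1.0]

weights = normalize(raw_weights)
estimate = weighted_mean(particles, weights)
print(estimate)
```

Because the weights are symmetric around the particle at 2.0, the estimate comes out at (approximately, up to floating-point rounding) 2.0.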

1. Prediction: Each particle is propagated forward in time according to the system's dynamics model. Because the process model includes random noise, this step accounts for uncertainty in how the system evolves, and the particles spread out across the state space to represent the range of possible future states.

2. Update: When a new observation arrives, the filter adjusts each particle's weight according to the likelihood of that observation given the particle's predicted state, as specified by the measurement model. The weights are then normalized to sum to one. Particles that agree closely with the observation receive higher weights, while those that do not lose importance.

3. Resampling: Over repeated updates, the particle set tends to degenerate, with a few particles holding almost all of the weight while the rest become irrelevant. To counter this, a resampling step draws a new set of particles with probability proportional to their weights, duplicating likely particles and discarding unlikely ones. This focuses computational resources on the most probable states and is crucial for accurate long-run estimates, although resampling too often can itself reduce particle diversity.
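The three steps above can be sketched for a toy problem as follows. This is a minimal bootstrap-style filter, assuming a 1-D random-walk dynamics model with Gaussian process noise and a direct Gaussian measurement of the state; the model, noise levels, observations, and function names are all hypothetical. Resampling uses the systematic scheme, which is one common choice among several.

```python
import math
import random


def predict(particles, process_std, rng):
    """Step 1: propagate each particle through the assumed random-walk model x' = x + noise."""
    return [p + rng.gauss(0.0, process_std) for p in particles]


def update(particles, weights, observation, meas_std):
    """Step 2: multiply each weight by the Gaussian likelihood p(y | x), then normalize."""
    new_w = [
        w * math.exp(-0.5 * ((observation - p) / meas_std) ** 2)
        for p, w in zip(particles, weights)
    ]
    total = sum(new_w)
    if total == 0.0:
        # Every particle is extremely unlikely; fall back to uniform weights.
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in new_w]


def systematic_resample(particles, weights, rng):
    """Step 3: draw N particles with probability proportional to weight,
    using one random offset and N evenly spaced positions (systematic resampling)."""
    n = len(particles)
    u0 = rng.random() / n
    resampled = []
    cumulative, i = weights[0], 0
    for k in range(n):
        u = u0 + k / n
        # Advance to the first particle whose cumulative weight reaches u;
        # the i < n - 1 guard protects against floating-point shortfall.
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
    return resampled, [1.0 / n] * n


def filter_step(particles, weights, observation, rng, process_std=0.5, meas_std=1.0):
    """One full iteration: predict, update, resample."""
    particles = predict(particles, process_std, rng)
    weights = update(particles, weights, observation, meas_std)
    return systematic_resample(particles, weights, rng)


rng = random.Random(0)
n = 1000
particles = [rng.uniform(-10.0, 10.0) for _ in range(n)]  # broad initial guess
weights = [1.0 / n] * n

for y in [2.0, 2.3, 2.1, 2.4, 2.2]:  # hypothetical observations
    particles, weights = filter_step(particles, weights, y, rng)

estimate = sum(p * w for p, w in zip(particles, weights))
print(round(estimate, 3))
```

Because this sketch resamples at every step, the weights are uniform afterwards and the final estimate is simply the mean of the particles. Resampling at every step is the simplest policy; many implementations resample only when the weights become too uneven.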

Advantages of Particle Filters


One of the primary advantages of particle filters is their flexibility. The standard Kalman filter assumes linear dynamics and Gaussian noise; particle filters impose no such restriction and can accommodate a wide range of system dynamics and measurement models. This adaptability makes them suitable for complex applications such as tracking multiple objects in cluttered environments or modeling financial markets, where the underlying processes are inherently non-linear.
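To see why this flexibility matters, consider an assumed nonlinear measurement model y = x^2/20 + noise, under which a single observation cannot distinguish x from -x. The sketch below weights a hypothetical evenly spaced grid of particles against one observation. The resulting weights form two separated peaks near x = +5 and x = -5, which a particle set represents directly but a single Gaussian, such as the one a Kalman filter maintains, cannot.

```python
import math


def likelihood(y, x, meas_std):
    """Gaussian likelihood of y under the assumed model y = x**2 / 20 + noise."""
    return math.exp(-0.5 * ((y - x * x / 20.0) / meas_std) ** 2)


# Hypothetical particles spaced evenly from -10 to 10 (a uniform prior on that range).
particles = [-10.0 + 0.05 * i for i in range(401)]
y = 1.25  # consistent with both x = +5 and x = -5

raw = [likelihood(y, x, meas_std=0.1) for x in particles]
total = sum(raw)
weights = [w / total for w in raw]

# Summaries of the two-peaked weighted particle set.
mean = sum(x * w for x, w in zip(particles, weights))
mass_pos = sum(w for x, w in zip(particles, weights) if x > 0)
mass_near_5 = sum(w for x, w in zip(particles, weights) if abs(abs(x) - 5.0) < 1.5)
print(round(mean, 6), round(mass_pos, 3), round(mass_near_5, 3))
```

The weighted mean lands near 0, a state the observation makes very unlikely, while almost all of the weight sits near the two peaks. With multimodal posteriors like this, reporting the mean alone can be misleading.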

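Additionally, particle filters are well suited to parallel computation: the prediction and weighting steps operate on each particle independently, so they map naturally onto multi-core CPUs and GPUs, while normalization and resampling require information from the whole particle set. This makes particle filters attractive for real-time applications such as robotic navigation and state estimation in autonomous systems.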

Challenges and Considerations

Despite their advantages, particle filters come with challenges. The number of particles needed for accurate estimation can grow very rapidly with the dimensionality of the state space, so in high-dimensional problems particle filters become computationally expensive. Practitioners address this with strategies for managing the particle count or with specialized resampling methods.
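Weight degeneracy, which tends to become more severe as the state dimension grows, is commonly monitored with the effective sample size, ESS = 1 / sum(w_i^2) for normalized weights. It equals N when all weights are equal and approaches 1 when a single particle dominates. The sketch below computes it and applies a resample-only-when-needed rule; the 0.5 * N threshold is a common heuristic rather than a fixed standard, and the function names and example weights are hypothetical.

```python
def effective_sample_size(weights):
    """ESS = 1 / sum(w_i^2) for normalized weights; ranges from 1 to N."""
    return 1.0 / sum(w * w for w in weights)


def should_resample(weights, threshold_ratio=0.5):
    """Resample only when ESS falls below threshold_ratio * N (a common heuristic)."""
    return effective_sample_size(weights) < threshold_ratio * len(weights)


uniform = [0.25] * 4                    # healthy particle set
degenerate = [0.97, 0.01, 0.01, 0.01]   # one particle holds almost all weight

print(effective_sample_size(uniform), should_resample(uniform))
print(round(effective_sample_size(degenerate), 3), should_resample(degenerate))
```

Resampling only when the ESS is low avoids the unnecessary loss of diversity that comes from resampling at every step.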

Furthermore, the process and measurement models must be designed carefully to avoid filter divergence, in which the estimates drift progressively away from the true state. Tuning these models and the filter's parameters often requires domain knowledge and extensive experimentation.

Conclusion

Particle filters represent a powerful tool for state estimation in complex dynamical systems. Their ability to process non-linear and non-Gaussian uncertainties makes them invaluable across various applications, from autonomous vehicle navigation to economic forecasting. With ongoing advancements in computational power and algorithm efficiency, particle filters are poised to remain a cornerstone in the field of statistical estimation for the foreseeable future.

share

-

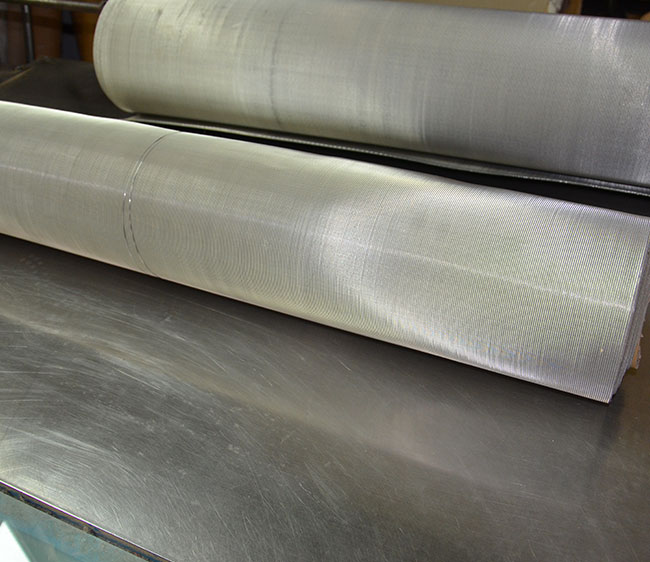

CE Certified 250 Micron Stainless Steel Mesh | Premium Filter

NewsJul.31,2025

-

CE Certification Buy Wire Mesh Fence for High Security and Durability

NewsJul.30,2025

-

Stainless Steel Mesh Filter Discs for Precise Filtration Solutions

NewsJul.29,2025

-

CE Certification 250 Micron Stainless Steel Mesh for Industrial Use

NewsJul.29,2025

-

Premium Stainless Steel Weave Mesh for Filtration and Security

NewsJul.29,2025

-

CE Certification 250 Micron Stainless Steel Mesh for Safety & Durability

NewsJul.29,2025